The Graphics Pipeline (do not skip this)

- yarbrough08

- May 31, 2015

- 4 min read

The OpenGL Shading Language (GLSL) is the shading language used by OpenGL (Open Graphics Library). One of OpenGL's main advantages over other graphics APIs is that it is cross-platform (Windows, Linux, Mac) and multi-vendor (NVIDIA, AMD/ATI, Intel).

DirectX, which uses the High Level Shading Language (HLSL), only supports Microsoft platforms such as Windows and has a reputation for being quite hard to develop with. Contrary to popular belief, all of the features of DirectX are available with OpenGL (if not pioneered by OpenGL). I am by no means trying to bash DirectX (I wish them continued success), but a lot of people seem to put DirectX in a different category than OpenGL - and that distinction is irrelevant.

Vulkan (previously codenamed glNext), the successor to OpenGL, will also begin by supporting GLSL, ensuring continued support for the shading language.

The first step in learning a shading language is to understand the graphics pipeline.

What follows is a simplified introduction to the graphics pipeline.

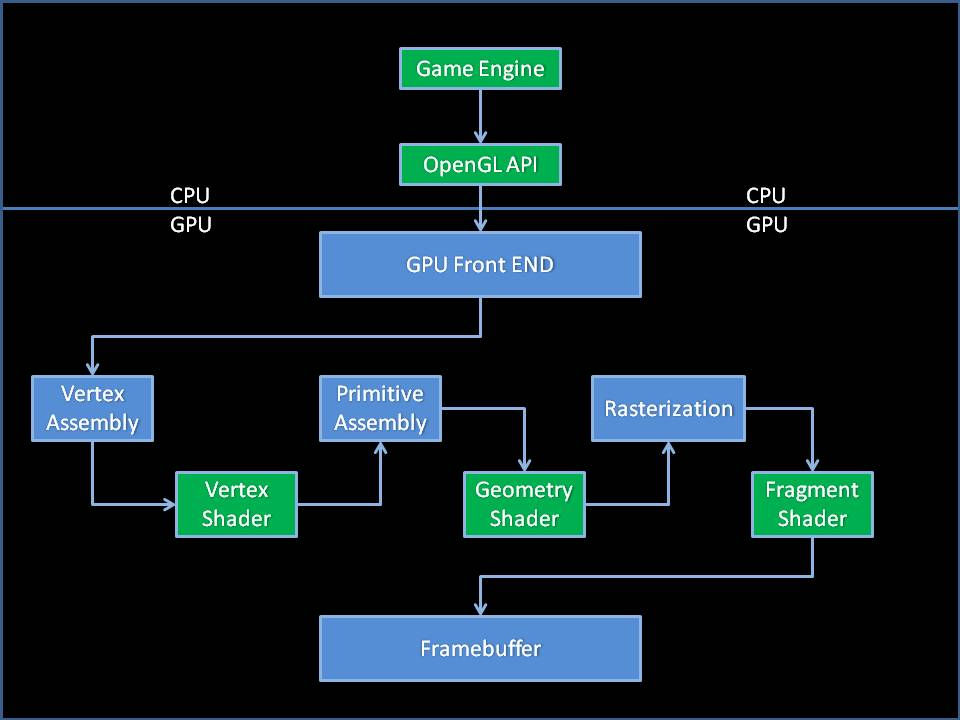

The game engine sends information to the GPU through the OpenGL API. The information sent will depend either on what you set up, or on what the game engine has set up. For the Blender Game Engine (BGE), this is somewhat limited. The BGE sends some attributes, but not all of them - more on that later.

The GPU starts by processing the information and sorting it per-vertex. It then sends that information to the Vertex Processors for shading. Each vertex is handled independently, meaning that the Vertex Shader is run once for every vertex in the shaded model. The Vertex Shader can edit vertex data, but it cannot add or delete vertices. The output of the Vertex Shader is sent for primitive assembly.
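To make the "once per vertex" idea concrete, here is a rough sketch in plain Python (not GLSL, and not how a GPU is actually implemented). The names `run_vertex_stage` and `scale_by_two` are made up for illustration. The point it tries to show is that the stage can change each vertex but always returns exactly as many vertices as it received.

```python
from typing import Callable, List, Tuple

Vertex = Tuple[float, float, float]


def run_vertex_stage(
    vertices: List[Vertex],
    vertex_shader: Callable[[Vertex], Vertex],
) -> List[Vertex]:
    """Call the shader once per vertex (hypothetical helper, not an OpenGL call)."""
    # One input vertex always produces exactly one output vertex,
    # so this stage can edit vertices but never add or delete them.
    return [vertex_shader(v) for v in vertices]


def scale_by_two(v: Vertex) -> Vertex:
    """A toy 'vertex shader' that doubles every coordinate."""
    x, y, z = v
    return (2.0 * x, 2.0 * y, 2.0 * z)


triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
shaded = run_vertex_stage(triangle, scale_by_two)
print(shaded)
```

A real Vertex Shader would typically multiply the position by transformation matrices instead of a fixed scale, but the one-in, one-out shape is the same.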

Primitive assembly is where individual vertices are connected to form a primitive (points and lines are also possible, but we will stick with triangles here). The newly formed primitive is sent to the Geometry Processors. The Geometry Processor is where our Geometry Shader takes over. The Geometry Shader can add, edit and delete vertices. This shader is run once for every triangle/face on the shaded model, which is useful for editing data that does not need to be processed for every vertex (which would be slower).
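The same kind of rough Python sketch can illustrate primitive assembly and the geometry stage. It assumes the simplest case, where every three consecutive vertices form one independent triangle, and the helper names (`assemble_triangles`, `run_geometry_stage`, `toy_geometry_shader`) are hypothetical. Unlike the vertex stage, the toy shader here returns a list, so it can delete a triangle (return nothing) or add new ones.

```python
from typing import Callable, List, Tuple

Vertex = Tuple[float, float, float]
Triangle = Tuple[Vertex, Vertex, Vertex]


def assemble_triangles(vertices: List[Vertex]) -> List[Triangle]:
    """Group every three consecutive vertices into one triangle."""
    return [
        (vertices[i], vertices[i + 1], vertices[i + 2])
        for i in range(0, len(vertices) - 2, 3)
    ]


def run_geometry_stage(
    triangles: List[Triangle],
    geometry_shader: Callable[[Triangle], List[Triangle]],
) -> List[Triangle]:
    """Call the shader once per triangle and collect whatever it emits."""
    output: List[Triangle] = []
    for tri in triangles:
        output.extend(geometry_shader(tri))
    return output


def toy_geometry_shader(tri: Triangle) -> List[Triangle]:
    a, b, c = tri
    # Delete: a triangle with two identical corners has no area, so emit nothing.
    if a == b or b == c or a == c:
        return []
    # Add: emit the original plus a copy shifted one unit along x.
    shifted = ((a[0] + 1.0, a[1], a[2]),
               (b[0] + 1.0, b[1], b[2]),
               (c[0] + 1.0, c[1], c[2]))
    return [tri, shifted]


vertices = [
    (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),  # a normal triangle
    (5.0, 5.0, 0.0), (5.0, 5.0, 0.0), (6.0, 5.0, 0.0),  # a degenerate triangle
]
assembled = assemble_triangles(vertices)
emitted = run_geometry_stage(assembled, toy_geometry_shader)
print(len(assembled), "triangles in,", len(emitted), "triangles out")
```

Here two triangles go in and two come out, but they are not the same two: the degenerate one was dropped and the first one was duplicated.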

The Geometry Shader sends its output for rasterization, which is where each primitive is converted into fragments. The fragments (roughly speaking, candidate pixels) are sent to the Fragment Processors to run through the Fragment Shader. The Fragment Shader is run for every fragment that could end up as a pixel on the screen, and of the different shader stages it is usually the most expensive. For this reason, GPUs have a fairly high number of fragment processors. The Fragment Shader ultimately determines the color of the model, and sends the output to the Framebuffer to display on the screen.
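Rasterization and fragment shading can be sketched the same way. The code below is a simplified Python illustration, not what the hardware does: it tests the center of every pixel in a tiny 4x4 "screen" against the triangle's three edges, ignores the tie-breaking rules real rasterizers use for pixels that sit exactly on an edge, and uses a plain dictionary as a stand-in for the Framebuffer. All names are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Fragment = Tuple[int, int]
Color = Tuple[float, float, float]


def edge(a: Point, b: Point, p: Point) -> float:
    """Signed area test: which side of the edge a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(tri: Tuple[Point, Point, Point], width: int, height: int) -> List[Fragment]:
    """Return one fragment for every pixel whose center is covered by the triangle."""
    a, b, c = tri
    fragments: List[Fragment] = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(b, c, p), edge(c, a, p), edge(a, b, p)
            # Covered if the center is on the same side of all three edges.
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                fragments.append((x, y))
    return fragments


def fragment_shader(frag: Fragment) -> Color:
    """A toy 'fragment shader': red increases with x, green with y."""
    x, y = frag
    return (x / 3.0, y / 3.0, 0.0)


triangle = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
fragments = rasterize(triangle, width=4, height=4)

# The shader runs once per fragment; the results land in the "framebuffer".
framebuffer: Dict[Fragment, Color] = {f: fragment_shader(f) for f in fragments}
print(len(fragments), "fragments shaded out of", 4 * 4, "pixels")
```

Even on this tiny screen the fragment stage runs far more often than the vertex stage did for the same triangle (10 fragments versus 3 vertices), which is the reason this stage tends to dominate the cost.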

Here is a simplified chart to show the different stages of the pipeline. Areas that can be altered with programming are shaded green; areas that are fixed-function are shaded blue. Note: The OpenGL API is only partially programmable with the BGE (through the OpenGL wrappers), and the framebuffer is also not accessible with the BGE.

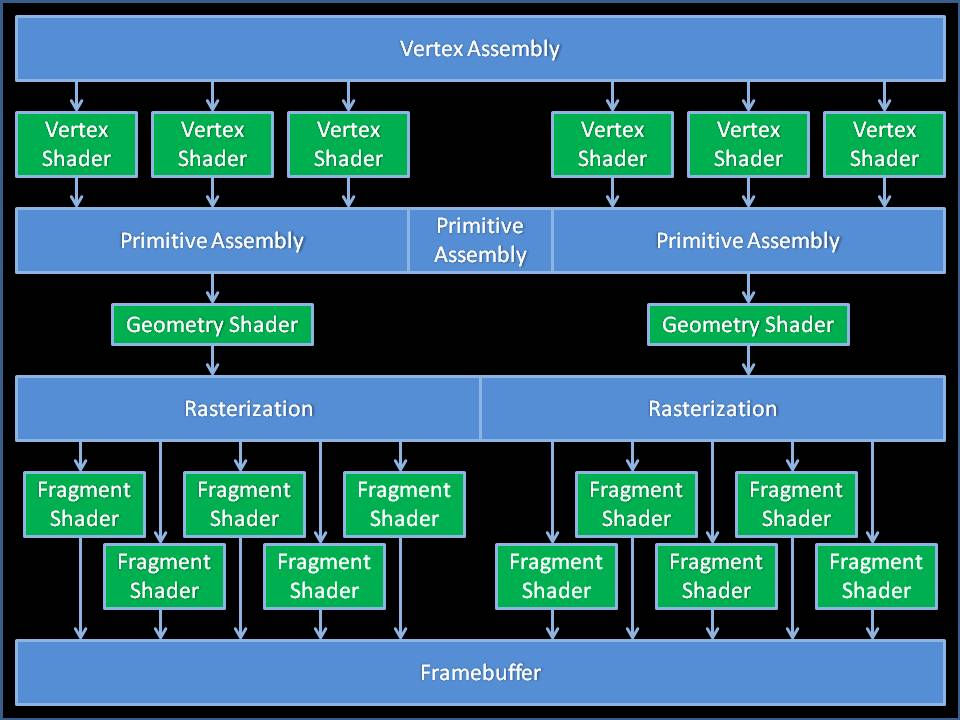

I should also note that these processors (vertex/geometry/fragment) are not alone. There are many of each, and GPUs are the kings of parallel processing. The ability of GPUs to process efficiently in parallel is why so many people are turning to GPUs to speed up their processing.

For example, consider vertex processing. The GPU does not simply wait until one vertex has been processed before sending another through; it sends multiple vertices through multiple processors and assembles them afterwards. The GPU keeps track of the various vertices, and when a primitive can be assembled it sends it on its way while it is still processing other vertices. There is a specific order that vertices correspond to within a primitive, but that only matters for geometry shaders (as far as programming is concerned) - and I'll talk more about that when we discuss those shaders.
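As a loose analogy only, the Python sketch below hands vertices to a small pool of worker threads and then assembles triangles from the results. A thread pool is nothing like real GPU hardware, and the pool size of three is an arbitrary assumption, but it shows the idea that the work is spread out while the original vertex order is still respected when primitives are put together.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Tuple

Vertex = Tuple[float, float, float]


def vertex_shader(v: Vertex) -> Vertex:
    """A toy 'vertex shader' that lifts every vertex by one unit along y."""
    x, y, z = v
    return (x, y + 1.0, z)


# Six vertices, i.e. two triangles.
vertices = [(float(i), 0.0, 0.0) for i in range(6)]

# Several workers shade vertices at the same time. pool.map returns the
# results in the same order as the inputs, regardless of which worker
# finished first, so the vertices still line up for primitive assembly.
with ThreadPoolExecutor(max_workers=3) as pool:
    shaded = list(pool.map(vertex_shader, vertices))

# Assemble every three consecutive shaded vertices into one triangle.
triangles = [tuple(shaded[i:i + 3]) for i in range(0, len(shaded), 3)]
print(triangles)
```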

Here is a chart to demonstrate how shaders are parallel-processed.

Even though GPUs can efficiently parallel-process data, they are not all-powerful. There are limits at each shader stage, both in performance and within GLSL itself. I'll get into more detail about the limits imposed by GLSL at a later point, but the performance limitations I want to bring up now.

Let's use the fragment shader as an example, and a 2D filter as the code that will run through it. On the blenderartists forum, I see a lot of people complaining about the performance of 2D filters. A 2D filter runs for every pixel on the screen; at 1080p (1920 x 1080) that is 2,073,600 pixels. If the code contains 1,024 calculations, that equals 2,123,366,400 calculations that the GPU must perform per frame along with everything else in the scene, and it is supposed to do that 60 times per second. It is easy to see how those calculations can add up. GPUs are becoming faster every day, but they are not infinitely fast. When we get into shader programming, try to keep this in mind.
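The arithmetic above is easy to reproduce, and swapping in your own resolution or calculation count gives a quick feel for how expensive a filter might be. This small Python snippet assumes a 1920 x 1080 screen and treats every calculation as one unit of work, which is a simplification.

```python
WIDTH, HEIGHT = 1920, 1080   # 1080p
CALCS_PER_PIXEL = 1024       # calculations in the 2D filter, per pixel
FRAMES_PER_SECOND = 60       # target frame rate

pixels = WIDTH * HEIGHT
calcs_per_frame = pixels * CALCS_PER_PIXEL
calcs_per_second = calcs_per_frame * FRAMES_PER_SECOND

print(f"pixels per frame:        {pixels:,}")            # 2,073,600
print(f"calculations per frame:  {calcs_per_frame:,}")   # 2,123,366,400
print(f"calculations per second: {calcs_per_second:,}")  # 127,401,984,000
```

At 60 frames per second that is over 127 billion calculations every second for the filter alone.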
